In this blog post, I’m going to quickly explain positive and negative classes in machine learning classification.

I’ll explain what the positive and negative classes are, how they relate to classification metrics, some examples of positive and negative classes in real-world machine learning, and more.

If you need something specific, just click on one of these links:

Table of Contents:

- A Quick Review of Classification

- Defining Positive and Negative

- Real World Examples of Positive and Negative Classes

- How Positive and Negative Classes Relate to Classification Metrics

That said, let’s dive in.

We’ll start with a review of classification and build from there.

A Quick Review of Classification

In machine learning, classification stands as one of the most fundamental and commonly performed tasks.

At its core, classification involves categorizing data into predefined classes or categories, making it important in several applications, from email filtering to medical diagnostics.

The essence of classification lies in its ability to evaluate incoming data examples and assign the correct label that defines what the example represents.

Central to the concept of classification in machine learning is the understanding of positive and negative classes. This is especially true for binary classification problems (although we often need the concepts of “positive” and “negative” classes for multiclass classification as well, which I will briefly touch on later).

Binary classification – the simplest form of classification – involves categorizing incoming data examples into two distinct groups, or classes.

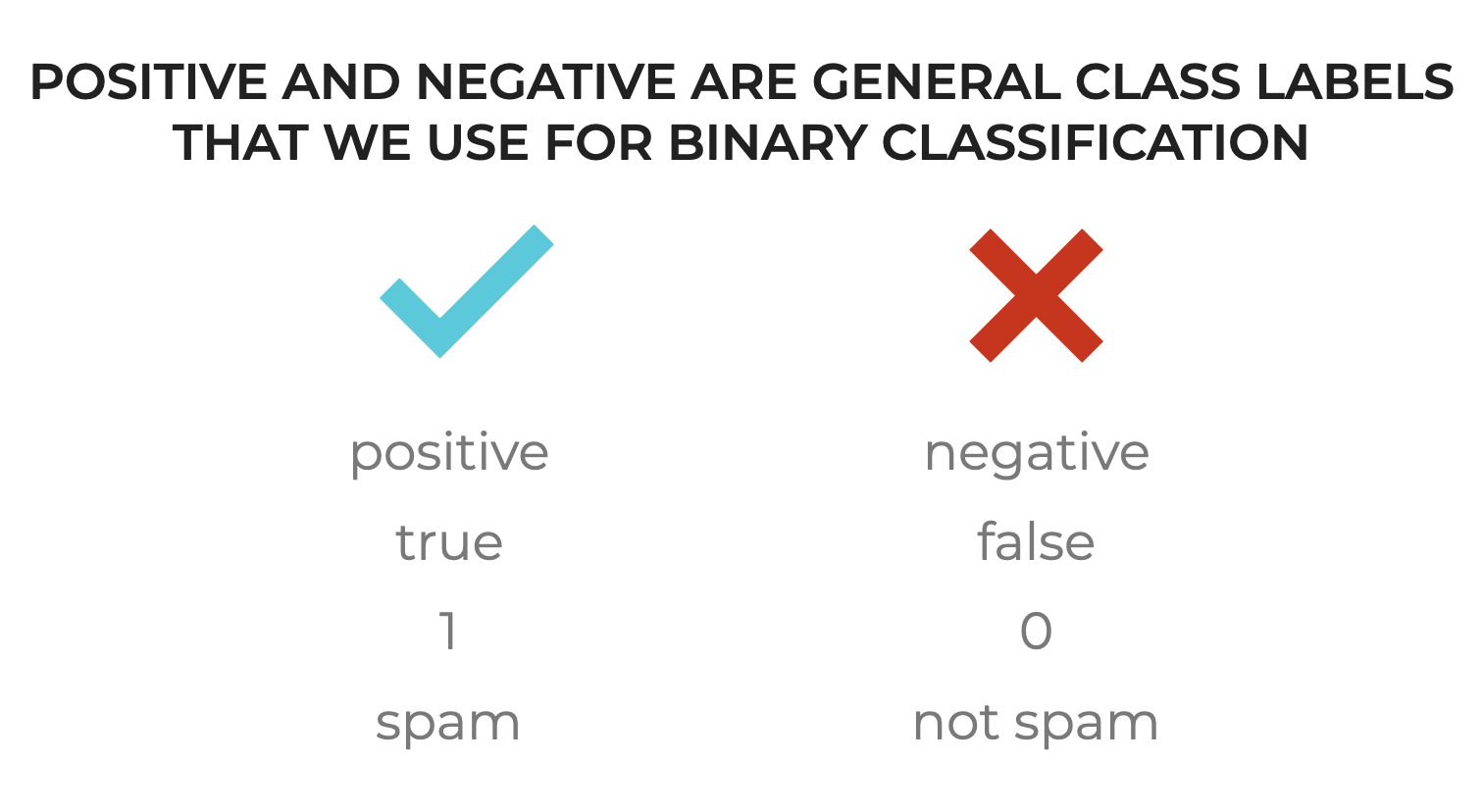

We commonly call these classes the “positive” class and the “negative” class.

Defining ‘Positive’ and ‘Negative’

So, let’s quickly define what we mean by positive and negative.

In the context of classification, “positive” refers to the class or outcome that the model is primarily interested in detecting or predicting. It represents the presence or occurrence of the specific characteristic or condition we’re trying to identify.

In contrast, “negative” denotes the absence or non-occurrence of the characteristic that we’re trying to identify. Essentially, the “negative” class is largely complementary to the positive class. The negative class is important because it helps the model learn which features are not associated with the condition of interest.

A couple of real-world examples of positive and negative classes

Let’s quickly run through a couple of real-world examples.

Spam Detection

Let’s say that we’re performing a spam detection task, where we’re trying to distinguish between “spam” (i.e., junk email) and “non-spam” (AKA, “ham”, which is email you want to receive).

In such a task, positive and negative would be:

- positive: a spam email

- negative: a non-spam email (AKA, ham)

Cancer Detection

Let’s try another one.

Let’s say that we’re trying to detect the presence of cancer in a diagnostic test.

In such a task, the positive and negative classes would refer to:

- positive: presence of cancer

- negative: absence of cancer

Fraud detection

Let’s do one more.

Let’s assume that we’re working at a bank and we’re trying to detect fraudulent transactions.

In such a task, positive and negative would typically be:

- positive: presence of fraud

- negative: absence of fraud

The positive class typically signifies the presence of the quality under investigation

Notice that in all of the examples we just looked at, the positive class signifies the presence of some condition or quality.

To be clear: there may be tasks or situations where this is not the case, but most of the time the positive class encodes the presence of something.

More specifically, it signifies the presence of something that we’re trying to detect or identify.

Alternative names for positive/negative

In classification, we often use the terms positive and negative as general terms that apply across many different tasks.

So, as seen above, for spam classification, we can generally use the term “positive” to specify a spam email and “negative” to specify a non-spam email.

Having said that, for a specific task, we may use task-specific labels instead of the general positive/negative labels.

So in spam classification, we might use “spam”/“non-spam”.

Or in fraud detection, we might use “fraud”/“no-fraud”.

In these cases, you need to realize that these task-specific class labels actually map to “positive” and “negative.”

So whenever you’re doing binary classification, if you see task-specific class labels, you need to think about and be mindful of which class is actually “positive” and which is “negative” for the purposes of the task.
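To make this concrete, here’s a minimal Python sketch of that mapping for spam classification. It assumes we encode the positive class as 1 and the negative class as 0 (a common convention, but an assumption here), and the names `POSITIVE_LABEL` and `to_binary` are hypothetical, made up for illustration.

```python
# Hypothetical setup: "spam" is the positive class, anything else ("ham") is negative.
POSITIVE_LABEL = "spam"


def to_binary(labels, positive_label=POSITIVE_LABEL):
    """Map task-specific labels to 1 (positive) or 0 (negative)."""
    return [1 if label == positive_label else 0 for label in labels]


emails = ["spam", "ham", "ham", "spam", "ham"]
print(to_binary(emails))  # [1, 0, 0, 1, 0]

# The same helper works for other tasks once you decide which label is positive.
print(to_binary(["fraud", "no-fraud"], positive_label="fraud"))  # [1, 0]
```

The key decision is the single `positive_label` argument: everything downstream (including the metrics below) depends on which label you pass there.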

Having said that, in some tasks it’s much less clear which class should be treated as positive and which as negative, and the choice can be somewhat arbitrary (for example, when classifying images as “cat” or “dog”).

Positive and Negative are Important for Classification Metrics

Ok.

Positive and negative.

You might be thinking “it makes sense, so what?”

As it turns out, almost all classification evaluation metrics depend on this framing of classes as positive and negative.

How Positive/Negative Relate to Confusion Matrices

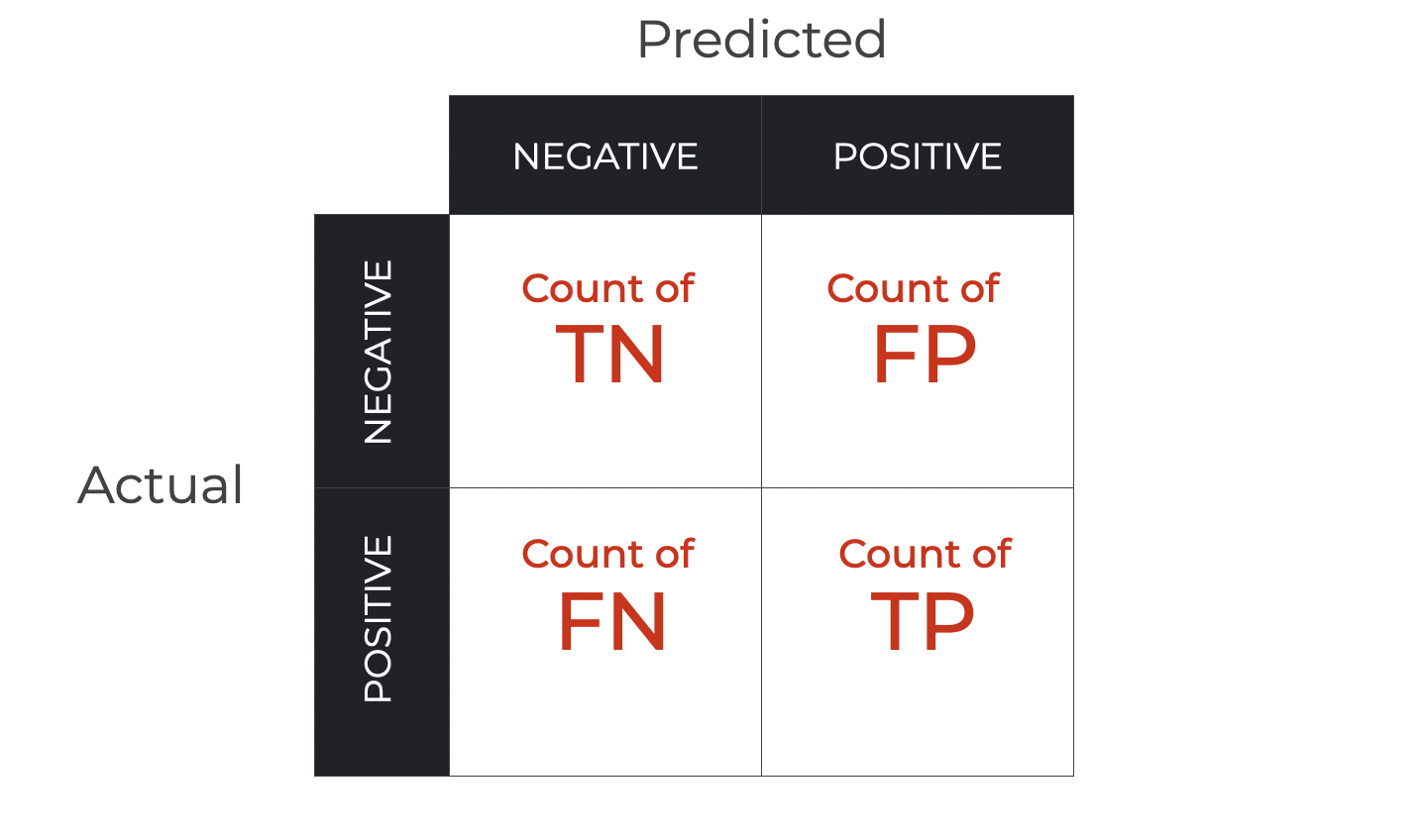

For example, in a confusion matrix, we categorize all predictions according to the value of the actual class (positive or negative), and also the predicted class (positive or negative).

By categorizing all examples according to the positive/negative values of the actual class and the predicted class, we get the 2×2 confusion matrix shown above, with 4 different types of predictions:

- True Positive: Predict positive when the actual input is positive

- True Negative: Predict negative when the actual input is negative

- False Positive: Predict positive when the actual input is negative

- False Negative: Predict negative when the actual input is positive
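If you want to see how these four counts come out of a set of predictions, here’s a rough Python sketch. It assumes labels are already encoded as 1 (positive) and 0 (negative), and `confusion_counts` is a hypothetical helper, not a library function.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, TN, FP, FN for binary labels (1 = positive, 0 = negative)."""
    counts = {"TP": 0, "TN": 0, "FP": 0, "FN": 0}
    for actual, predicted in zip(y_true, y_pred):
        if predicted == 1 and actual == 1:
            counts["TP"] += 1  # predicted positive, actually positive
        elif predicted == 0 and actual == 0:
            counts["TN"] += 1  # predicted negative, actually negative
        elif predicted == 1 and actual == 0:
            counts["FP"] += 1  # predicted positive, actually negative
        elif predicted == 0 and actual == 1:
            counts["FN"] += 1  # predicted negative, actually positive
        else:
            raise ValueError("labels must be encoded as 0 or 1")
    return counts


# Made-up actual labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
print(confusion_counts(y_true, y_pred))  # {'TP': 3, 'TN': 3, 'FP': 1, 'FN': 1}
```

Each example lands in exactly one of the four cells, so the four counts always add up to the number of examples (8 here).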

And these 4 different classification prediction types – True Positive, True Negative, False Positive, and False Negative – are themselves important for other metrics.

Positive and Negative Classes in Precision, Recall and F1 Score

So the terms positive and negative are in turn important for classification metrics, because almost all classification metrics are built on the quantities of True Positives, True Negatives, False Positives, and False Negatives.

Precision

For example, precision is the proportion of positive predictions that were correct.

$$\text{Precision} = \frac{\text{TP}}{\text{TP} + \text{FP}} \tag{1}$$

As seen above, we calculate it with True Positives and False Positives (which obviously requires us to understand the distinction between positive and negative classes).
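As a quick sketch, here’s precision written as a small Python function of the counts. The function name is hypothetical, and returning 0.0 when there are no positive predictions is an assumption (the ratio is undefined in that case).

```python
def precision(tp, fp):
    """Share of positive predictions that were correct: TP / (TP + FP)."""
    if tp + fp == 0:
        return 0.0  # assumption: no positive predictions -> report 0.0
    return tp / (tp + fp)


print(precision(tp=3, fp=1))  # 0.75
```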

Recall

Recall is the proportion of actual positive examples that we correctly classify as positive.

$$\text{Recall} = \frac{\text{TP}}{\text{TP} + \text{FN}} \tag{2}$$

Again: this requires us to understand positives and negatives.
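Here’s the same idea for recall, as a minimal sketch. Again the function name is hypothetical, and returning 0.0 when there are no actual positives is an assumption.

```python
def recall(tp, fn):
    """Share of actual positives that were predicted positive: TP / (TP + FN)."""
    if tp + fn == 0:
        return 0.0  # assumption: no actual positives -> report 0.0
    return tp / (tp + fn)


print(recall(tp=3, fn=2))  # 0.6
```

Notice that precision uses False Positives while recall uses False Negatives, so a model can score well on one and poorly on the other.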

F1 Score

Finally, we have F1 score, which balances precision and recall by taking their harmonic mean.

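$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} = \frac{2\,\text{TP}}{2\,\text{TP} + \text{FP} + \text{FN}} \tag{3}$$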

And we see that to calculate F1, we need True Positives, False Positives, and False Negatives.
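Here’s a minimal sketch of F1 computed directly from the three counts, using the 2TP / (2TP + FP + FN) form. The helper name is hypothetical; it should give the same value as the harmonic mean of precision and recall.

```python
def f1_from_counts(tp, fp, fn):
    """F1 score from counts: 2*TP / (2*TP + FP + FN)."""
    denominator = 2 * tp + fp + fn
    if denominator == 0:
        return 0.0  # assumption: no positives predicted or present -> report 0.0
    return 2 * tp / denominator


# Same made-up counts as the precision (0.75) and recall (0.6) examples.
print(round(f1_from_counts(tp=3, fp=1, fn=2), 4))  # 0.6667

# Cross-check against the harmonic mean of precision and recall.
p, r = 3 / (3 + 1), 3 / (3 + 2)
print(round(2 * p * r / (p + r), 4))  # 0.6667
```

Note that True Negatives never appear in the formula, which is one reason F1 is popular when the negative class is much larger than the positive class.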

There are other classification metrics as well, but these are three of the most important.

And as you’ve seen, to calculate them, you need to have the number of True Positives, False Positives, and False Negatives. In turn, these require the positive/negative class distinction.
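To see why the choice of positive class matters, here’s a small sketch that computes precision twice on the same made-up spam predictions: once with “spam” as positive and once with “ham” as positive. The helper `precision_for` is hypothetical. (If you use scikit-learn, its metric functions such as `precision_score` expose this same choice through a `pos_label` parameter.)

```python
def precision_for(y_true, y_pred, positive_label):
    """Precision when `positive_label` is treated as the positive class."""
    tp = sum(1 for a, p in zip(y_true, y_pred) if p == positive_label and a == positive_label)
    fp = sum(1 for a, p in zip(y_true, y_pred) if p == positive_label and a != positive_label)
    return tp / (tp + fp) if tp + fp else 0.0


# Made-up labels: same data, same predictions.
y_true = ["spam", "spam", "ham", "ham", "ham", "ham"]
y_pred = ["spam", "ham", "spam", "ham", "ham", "ham"]

print(precision_for(y_true, y_pred, positive_label="spam"))  # 0.5
print(precision_for(y_true, y_pred, positive_label="ham"))   # 0.75
```

Nothing about the model changed between the two lines; only our decision about which class is “positive” did.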

How this Fits with Multi-Class Classification

Finally, let’s briefly link this to multi-class classification.

How do positives and negatives work for classification tasks with more than 2 classes?

Well, it’s complicated.

There are actually a few ways to do multi-class classification.

But, as it turns out, they still use these “positive” and “negative” classes.

One vs the rest

In one vs the rest, we treat one of the classes as “positive” and we group together all of the other classes as “negative”.

We literally analyze one class as “positive” vs the rest.

This allows us to use the positive/negative classes, which in turn allows us to use the classification metrics that I described above.
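Here’s a rough sketch of one vs the rest in Python: for each class, we treat that class as positive and everything else as negative, count True Positives, False Positives, and False Negatives, and compute precision and recall. The labels are made up and `one_vs_rest_counts` is a hypothetical helper.

```python
def one_vs_rest_counts(y_true, y_pred, positive_class):
    """TP, FP, FN when `positive_class` is positive and every other class is negative."""
    tp = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        actual_pos = actual == positive_class
        predicted_pos = predicted == positive_class
        if predicted_pos and actual_pos:
            tp += 1
        elif predicted_pos:
            fp += 1  # predicted this class, but it was some other class
        elif actual_pos:
            fn += 1  # it was this class, but we predicted some other class
    return tp, fp, fn


# Made-up 3-class labels and predictions.
y_true = ["A", "B", "C", "A", "C", "B"]
y_pred = ["A", "C", "C", "B", "C", "B"]

for cls in sorted(set(y_true)):
    tp, fp, fn = one_vs_rest_counts(y_true, y_pred, cls)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    print(f"{cls}: precision={precision:.2f}, recall={recall:.2f}")
# A: precision=1.00, recall=0.50
# B: precision=0.50, recall=0.50
# C: precision=0.67, recall=1.00
```

Each pass through the loop is just an ordinary binary problem, so the per-class precision and recall are exactly the metrics described above.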

One vs One

Additionally, we can perform one vs one.

In one vs one, we analyze one class vs another class, one pair at a time.

So if we have 3 classes (A, B, and C), we can analyze A vs B, A vs C, and B vs C.

This turns a multi-class situation into a set of binary classification tasks. In turn, that enables us to treat one class in each pair as positive and the other as negative, which then allows us to use classification metrics like precision, recall, etc.
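Here’s a minimal sketch of how one vs one splits a dataset into binary tasks. It assumes that each pairwise task keeps only the examples whose actual class belongs to that pair (which is how pairwise classifiers are typically trained), and it arbitrarily treats the alphabetically first class in each pair as positive. The function name and the toy data are hypothetical.

```python
from itertools import combinations


def one_vs_one_tasks(X, y):
    """Build one binary task per pair of classes.

    For each pair (first, second), keep only examples labeled `first` or `second`,
    then encode `first` as positive (1) and `second` as negative (0).
    """
    tasks = {}
    for first, second in combinations(sorted(set(y)), 2):
        subset = [(x, label) for x, label in zip(X, y) if label in (first, second)]
        X_pair = [x for x, _ in subset]
        y_pair = [1 if label == first else 0 for _, label in subset]
        tasks[(first, second)] = (X_pair, y_pair)
    return tasks


# Made-up one-feature dataset with 3 classes.
X = [0.1, 0.4, 0.35, 0.8, 0.7, 0.2]
y = ["A", "B", "B", "C", "C", "A"]

for pair, (X_pair, y_pair) in one_vs_one_tasks(X, y).items():
    print(pair, X_pair, y_pair)
# ('A', 'B') [0.1, 0.4, 0.35, 0.2] [1, 0, 0, 1]
# ('A', 'C') [0.1, 0.8, 0.7, 0.2] [1, 0, 0, 1]
# ('B', 'C') [0.4, 0.35, 0.8, 0.7] [1, 1, 0, 0]
```

With k classes this produces k·(k − 1)/2 binary tasks, so the number of tasks grows quickly as you add classes.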

Wrapping Up: Why Positive and Negative Classes are Important for Machine Learning

The concepts of positive and negative classes are critical for understanding classification. Positive and negative are needed directly for binary classification, but as noted above, the concepts can also be extended to multiclass situations.

I’m a firm believer in the principle of “master the fundamentals” and then build your knowledge up from there.

These concepts of positive and negative are like machine learning bedrock. You need to know what they mean, and in turn, how they relate to classification metrics, etc.

Having said that, there’s probably some detail and nuance that I’ve left out, so you should sign up for our email newsletter to get all of our future ML articles.

Leave Your Questions and Comments Below

Having said all of that, do you have other questions about positive and negative in machine learning?

Are you still confused about something?

I want to hear from you.

Leave your questions and comments in the comments section at the bottom of the page.

Sign up for our email list

If you want to learn more about machine learning and AI, then sign up for our email list.

Every week, we publish free tutorials about a variety of topics in machine learning, data science, and AI, including:

- Scikit Learn

- Numpy

- Pandas

- Machine Learning

- Deep Learning

- … and more

If you sign up for our email list, then we’ll deliver those free tutorials to you, direct to your inbox.

Hi!

I don’t exactly understand the one vs one method in multiclass classification. How to build a confusion matrix if you test A versus B and the actual value is C?

Can you clarify it?

I’m going to write more about the complexities of multiclass classification in another post.